We're continuing to work with investigative reporters to research unscrupulous activity on social media. Most recently, Engadget published a piece on nefarious political influencers on Reddit. We’ve written in the past about analyzing social media comments, but didn’t make the ingesters publicly available. With an increasing need for research in this area, we decided that releasing our Reddit and Hacker News ingesters could help new users get started with Gravwell even faster, so we open-sourced them. Read on to learn how to get the ingesters, how to run them, and how to get started with the data.

Installing the Ingesters

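Assuming you have Go installed, you can fetch and build the ingesters by running the following. (These are GOPATH-style "go get" commands; Go 1.18 and later no longer build and install commands with "go get" in module mode, and "go install" is the replacement.)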

go get github.com/gravwell/ingesters/reddit_ingester

go get github.com/gravwell/ingesters/hackernews_ingester

This should compile the ingesters and drop them in $GOPATH/bin, which by default will be ~/go/bin. If you haven’t done so already, we recommend adding that directory to your $PATH for convenience.

Running the Ingesters

These ingesters are quite simple compared to the “official” Gravwell ingesters (Netflow, Simple Relay, etc.). Rather than running them as daemons, we like to just start them in a terminal window or tmux session and kill the process when we’re done. We’re going to assume that Gravwell is installed on the local machine; if not, simply change 127.0.0.1 in the following examples to your Gravwell instance’s IP. You’ll also need your “ingest secret”, defined in the Ingest-Auth field of gravwell.conf; for these examples, we’ll use “MyIngestSecret”.

The following command will start the Reddit ingester, fetching all Reddit comments as they are posted and ingesting them with the “reddit” tag:

reddit_ingester -clear-conn 127.0.0.1:4023 -ingest-secret MyIngestSecret

To start the Hacker News ingester (ingesting to the “hackernews” tag), we use a similar command:

hackernews_ingester -clear-conn 127.0.0.1:4023 -ingest-secret MyIngestSecret

Both ingesters store data in JSON format, so a review of the JSON module documentation may be useful as you begin your analysis. The example analysis below focuses on Reddit data, but Hacker News data can be analyzed the same way: identify the JSON fields of interest, extract them, and operate on the extracted fields.

Analyzing the Results

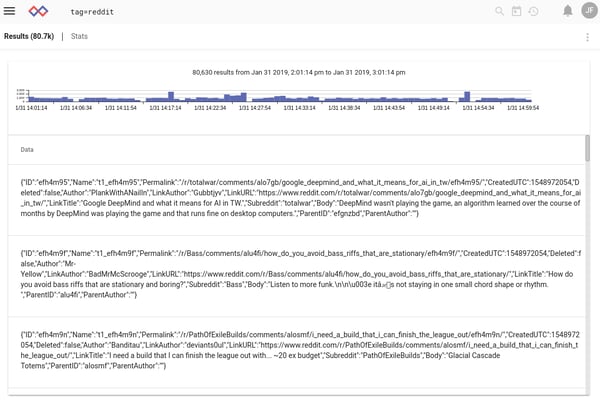

Once we’ve got data flowing in, we can begin analysis. Let’s start by looking at some raw Reddit entries by searching “tag=reddit” over the last hour:

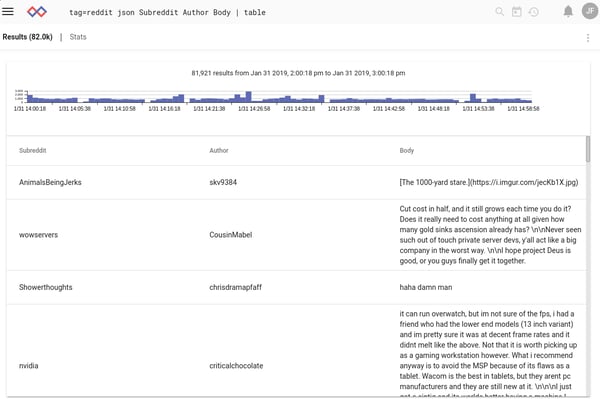

The entries are coming in as JSON. That’s good! We have a JSON parser built into Gravwell! We can use it to pull out the subreddit, author, and comment body, which makes the results easier to scan:

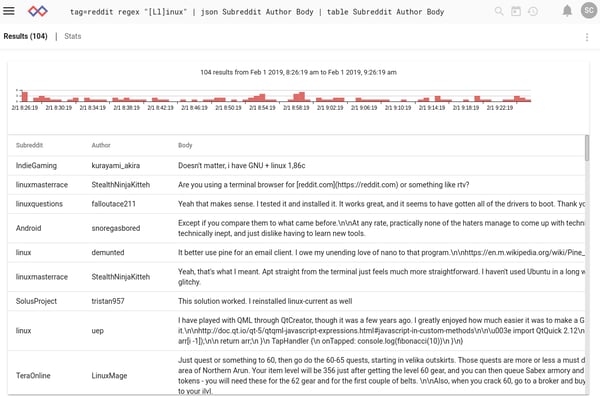

That’s just presentation, though. Maybe we want to see what people are saying about Linux, and where they’re saying it. We can use the regex module to return only results containing the word “Linux” or “linux”:

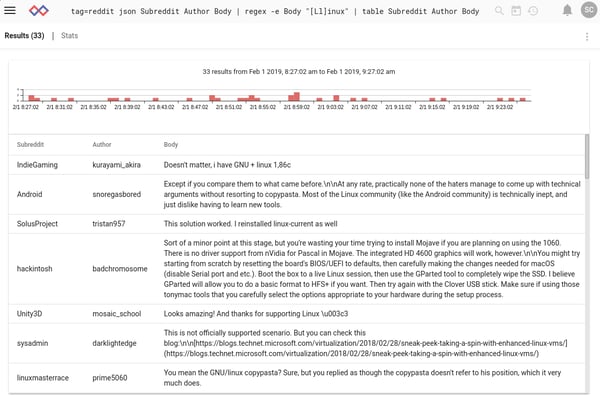

You may notice a weakness: this search turned up a user with “Linux” in their name, even though the comment has nothing to do with the operating system. We can try to remedy this with a slight tweak, by searching on the contents of the enumerated value “Body” rather than the whole entry:

That’s better! Now we can go a step further and see which subreddits are talking about Linux the most. We do this by sticking the “count” module in the pipeline after grep. Grep narrows the results to only those entries containing the word “Linux” in the body, and count then tallies the entries for each value of the “Subreddit” enumerated value.

The results are essentially what you'd expect, centering on Linux and gaming subreddits.

Analyzing Hacker News

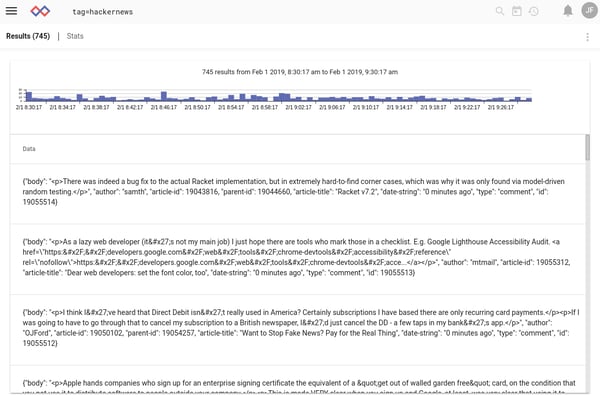

We can apply similar techniques to Hacker News comments, which are also JSON-formatted:

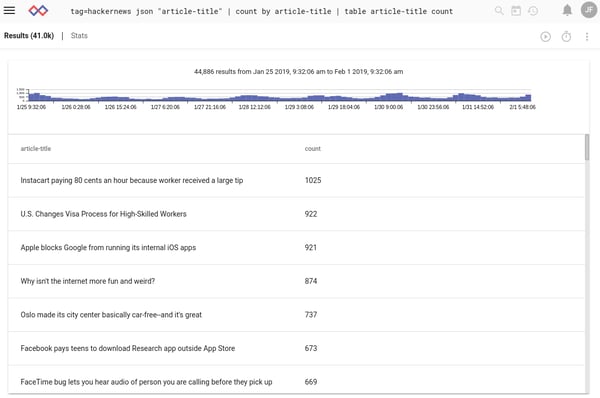

For example, we can see which stories received the most comments in the last week:

Conclusion

We hope this short introduction to the Reddit and Hacker News ingesters spurs you to do some experimentation of your own! These tools are a great way to get started with Gravwell Community Edition if diving straight into Netflow and such seems intimidating. If you find some interesting data or make cool dashboards, be sure to email info@gravwell.io and tell us about it!