Huge Gravwell updates today!

Thanks for your patience during this short period of radio silence; it’s been for good reason. Today we’re happy to announce Gravwell version 3, which comes with a whole slew of delicious features and improvements.

The 2018 development year was primarily focused on improving search and ingest performance, scalability, and stability. We’ve made tremendous strides on this front and I’m excited to talk briefly about those here and in greater detail during the coming weeks. Our 2019 roadmap has a strong focus on improving out-of-the-box functionality -- keep reading for more info about the update and exciting plans for this year.

All new user interface

The UI has been completely rebuilt. We expanded our GUI team and the newcomers have done a bang-up job at improving UX and making things pretty. For you nerds, Google is sunsetting the “AngularJS” project so we had to move to “Angular” (awesome names, I know). We took that opportunity to switch charting libraries, improve some workflows, and set ourselves up for a fantastic 2019 of GUI work.

Some highlights:

- Improved search investigation workflow

- Custom interface and chart themes (dark theme for all my emo nerds out there)

- Improved charts and data renderers

- Improved entry displays, like pretty-printed JSON and tables with auto-columns

- “Live” searches and dashboards that auto-update for you

- Enhanced visibility over Gravwell cluster infrastructure

- Logbot now greets you when you log in :D

- 3D globes and 3D globe heat maps for eye candy

- Improved force-directed graph (FDG) visualizations and controls

- Downloadable charts

- Better mobile support

- Some French je ne sais quoi

- Internationalization

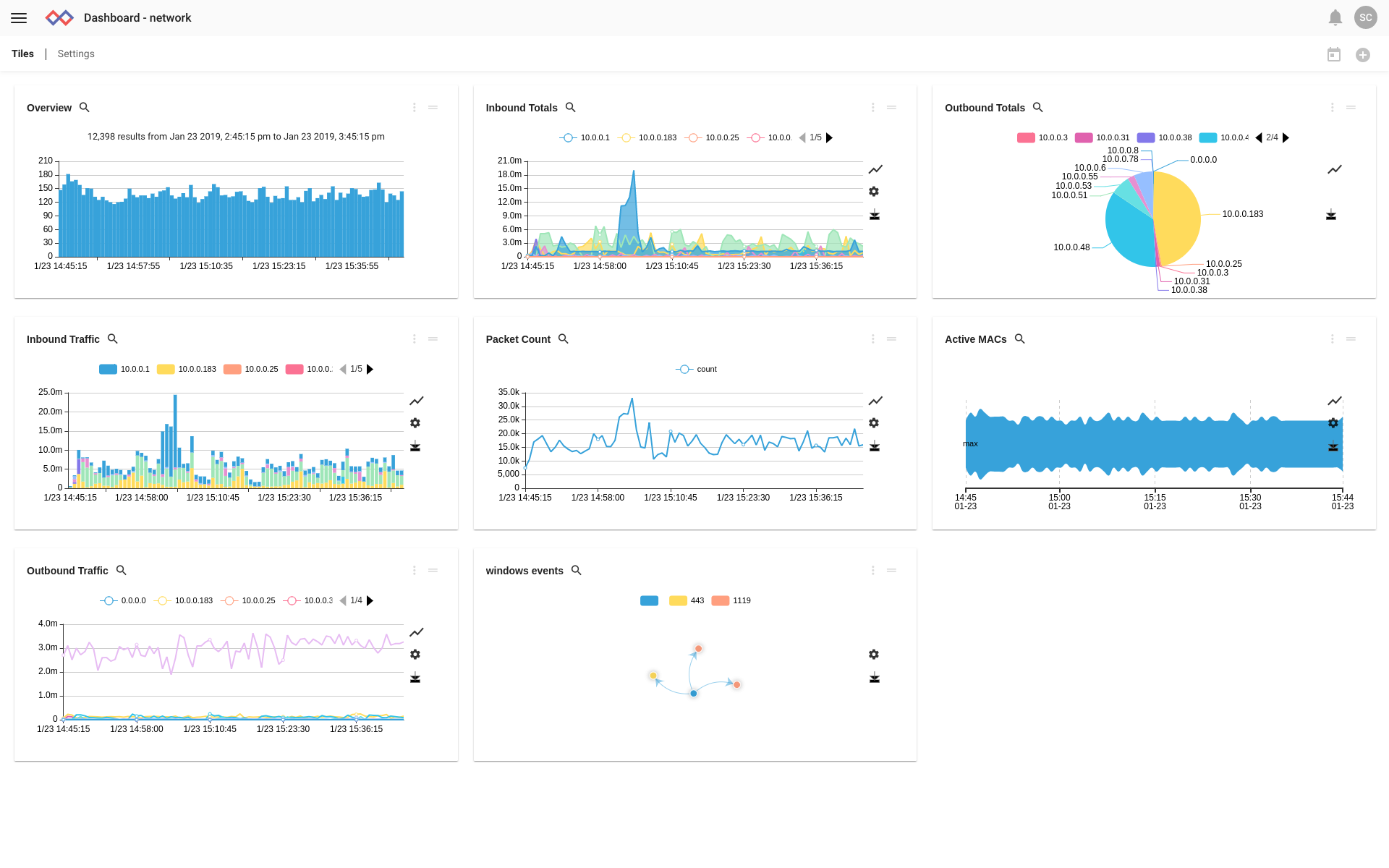

This dashboard helps a Community Edition (CE) user monitor their home network for anomalous activity and Wi-Fi leechers.

This dashboard is of our own making and monitors our company brewing process.

Here is our fine Idaho Wildflower IPA in the making.

Serious Backend Muscle

Some really powerful capabilities have turned our temporal structure-on-read indexing system into a hybrid model that dramatically speeds up “needle-in-haystack” searching. We’ve incorporated a modular “acceleration” system that can use bloom filters for speedups with low storage overhead, or a full indexing solution to speed up almost any query, all on top of our already amazing distributed time series capabilities. The modularity is important because it means organizations get exactly the tradeoff they want when it comes to the age-old question of indexing: disk usage vs. performance.
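For the curious, here is a minimal Python sketch of the general idea behind bloom-filter acceleration. It is not Gravwell's implementation: the `BloomFilter` class, the `build_blocks` and `search` helpers, the block size, and the filter parameters are all made up for illustration. Each block of entries gets a small filter, and a search skips any block whose filter says the needle is definitely not in there.

```python
import hashlib


class BloomFilter:
    """Tiny Bloom filter: may give false positives, never false negatives."""

    def __init__(self, num_bits=1024, num_hashes=3):
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = 0  # a Python int used as a bit array

    def _positions(self, item):
        # Derive num_hashes bit positions by salting a SHA-256 digest.
        for salt in range(self.num_hashes):
            digest = hashlib.sha256(f"{salt}:{item}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, item):
        for pos in self._positions(item):
            self.bits |= 1 << pos

    def might_contain(self, item):
        return all((self.bits >> pos) & 1 for pos in self._positions(item))


def build_blocks(entries, block_size):
    """Split entries into fixed-size blocks, each with its own filter."""
    blocks = []
    for start in range(0, len(entries), block_size):
        chunk = entries[start:start + block_size]
        bloom = BloomFilter()
        for entry in chunk:
            for token in entry.split():
                bloom.add(token)
        blocks.append((bloom, chunk))
    return blocks


def search(blocks, needle):
    """Return matching entries and the number of blocks actually scanned."""
    hits, scanned = [], 0
    for bloom, chunk in blocks:
        if not bloom.might_contain(needle):
            continue  # needle is definitely absent, so skip the whole block
        scanned += 1
        hits.extend(e for e in chunk if needle in e.split())
    return hits, scanned
```

The filter costs only a fixed number of bits per block, which is where the low storage overhead comes from. The price is that it can only answer "definitely not here" or "maybe here", so a "maybe" block still has to be scanned in full.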

To give you a little bit of context around why this is so awesome, one exciting project we built out in the past couple of months has been a deployment ingesting over half a petabyte of data per week. Initially, we handled aggregations just fine, but needle-in-haystack searches were painful when looking over larger periods of time. The Gravwell dev team really stepped it up and beefed up our modular indexing to accommodate. Bloom filters resulted in less than 5% disk overhead on the ingest, but the performance wasn’t there given this extreme volume of data, so we swapped to a full indexing acceleration module instead. With intelligent field selection, the index overhead was still less than 20% and we significantly reduced the cluster size for these analytics compared to the existing competitor deployment. We think that’s pretty awesome. If you’re interested in dropping your cluster size and your licensing costs, reach out at sales@gravwell.io.
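To put rough numbers on that tradeoff, here is a back-of-the-envelope calculation. It assumes exactly 0.5 PB (500 TB, decimal units) of ingest per week and treats the 5% and 20% figures as upper bounds; the function name is ours, purely for illustration.

```python
def overhead_tb_per_week(ingest_tb_per_week, overhead_percent):
    """Extra disk used by acceleration data, in TB per week."""
    return ingest_tb_per_week * overhead_percent / 100


INGEST_TB = 500  # assumption: 0.5 PB per week, 1 PB = 1000 TB

bloom_tb = overhead_tb_per_week(INGEST_TB, 5)   # bloom filter ceiling
index_tb = overhead_tb_per_week(INGEST_TB, 20)  # full index ceiling

print(f"bloom filters: under {bloom_tb} TB/week")  # under 25.0 TB/week
print(f"full index:    under {index_tb} TB/week")  # under 100.0 TB/week
```

Under those assumptions, the full index costs at most about 75 TB per week more than bloom filters, and that was the price of making needle-in-haystack searches workable at this volume.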

Other awesome things in the 3.0 Gravwell backend:

- Even more speed and performance!

- Auto extractors - significantly reduce query length by automatically extracting fields

- Stats search module makes complicated statistical analysis easier

- Hot failover and online replication

- Creating lookup resources is easy with ‘table -save’ in the query

- An ‘ip’ module for network filtering including a private shortcut: ‘SrcIP !~ PRIVATE’

- Windows log parsing - no more regex or xml, hooray!

- Stability improvements and distributed frontend coordination

- New ingesters including an HTTP POST ingester make it easier to get data into Gravwell for juicy analytics
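
To make the ‘SrcIP !~ PRIVATE’ shortcut from the list above a bit more concrete, here is a small Python sketch of what a filter like that does conceptually. It is not the ‘ip’ module itself: we are assuming PRIVATE means the RFC 1918 IPv4 ranges and that ‘!~’ reads as "not inside", and the helper names and entry layout are hypothetical.

```python
import ipaddress

# Assumption: "PRIVATE" is taken to mean the RFC 1918 IPv4 ranges.
PRIVATE_NETS = [
    ipaddress.ip_network(cidr)
    for cidr in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")
]


def is_private(addr):
    """True if addr falls inside any of the assumed private ranges."""
    ip = ipaddress.ip_address(addr)
    return any(ip in net for net in PRIVATE_NETS)


def non_private_sources(entries):
    """Keep entries whose SrcIP lies outside every private range."""
    return [e for e in entries if not is_private(e["SrcIP"])]


flows = [
    {"SrcIP": "10.1.2.3", "DstPort": 443},
    {"SrcIP": "8.8.8.8", "DstPort": 53},
    {"SrcIP": "192.168.1.10", "DstPort": 22},
]
external = non_private_sources(flows)  # only the 8.8.8.8 flow remains
```

Writing the three subnets out by hand in every query gets old fast, which is the appeal of a named shortcut.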

How Do You Get This New Hotness?

I'm glad you asked.

For our users on the Debian repository it's as easy as:

apt update && apt upgrade

Standalone installers are available at https://dev.gravwell.io/docs/#!quickstart/downloads.md

All the upgrades will take place transparently and you'll be ready to go. If you're coming from version 1 of our GUI, you may need to spruce up your dashboard layouts but that's easier with the new drag-and-drop tile arrangement.

Gravwell in 2019

Gravwell in 2019 is focusing on improving the user experience and making it easier for our customers to get insights out of data. We get it, writing queries isn’t a whole lot of fun and not something everyone can (or should) be doing. Our updated user interface released today is a step in that direction.

In 2019 you’ll also see us drop a package system and community marketplace for easily installing packages that handle common security needs. A package like “Security Auditing of dnsmasq” would come with pre-built dashboards, queries, lookup resources, and alerting. You’ll also see improvements to the natural flow of investigation that make dynamically exploring data easy for analysts of many skill levels. We’ll continue to add more search module capabilities and visualizations as the year progresses and we fold customer requests into our roadmap.

Stay Tuned for Details

We’ve got more posts coming that explore some of these new capabilities. We’ll also walk through a couple of case studies in security and UEBA -- like working the NOC at Supercomputing 2018, and how we helped a startup accelerate growth by monitoring their key performance indicators and kick off a naughty user who was costing them money and time.