Overview

Along with the flashier new features of Gravwell 5.0.0, we included something quietly powerful: tokens. API tokens are not a new concept; pretty much every large system has them, and now we do too. For this post we will go over the basics of Gravwell API Access Tokens and show how to use them to read Gravwell system metrics and execute queries. Then we will use the new direct search API to perform some very simple integrations with external tools that do not know anything about Gravwell or its API.

The full documentation for API tokens is available on our docs page: https://docs.gravwell.io/#!tokens/tokens.md

Token Basics

API access tokens are secret tokens that let users grant external tools access to specific Gravwell APIs without requiring a full dynamic login. For better security, each token is restricted to a user-specified set of capabilities: one token might only have access to system stats, while another could be allowed to run queries but nothing else. With API tokens, you can integrate almost any tool which supports generic webhooks/HTTP REST automations--they just need to set a request header.

There are a few important caveats around API access tokens. First and foremost: all admin-only APIs are strictly off limits. It is not possible to create an access token that can create users or administer the system; access tokens are only for general operations.

Secondly, API access tokens can only restrict access; they cannot grant access that a user does not already have. For example, if a current user does not have the right to ingest data or upload resources, then they cannot create access tokens that can perform those operations.

API access tokens are also dynamically linked to user capabilities, which means if you create an access token that can perform an action and your account later loses access to that operation, all of your tokens also lose access.
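One way to picture these three rules together is as set arithmetic. The sketch below is only a conceptual model, not Gravwell's implementation, and every capability name in it is hypothetical: a token's effective access is whatever it was granted, intersected with what its owner can do right now, with admin-only operations always removed.

```python
# Conceptual model only. Capability names are hypothetical and this is
# not how Gravwell is implemented internally.
ADMIN_ONLY = frozenset({"create_user", "admin_system"})


def effective_capabilities(token_caps, user_caps):
    """Return the operations a token can actually perform right now."""
    # A token can only restrict access: it never exceeds the owner's
    # current capabilities, and admin-only operations are always excluded.
    return (set(token_caps) & set(user_caps)) - ADMIN_ONLY


user = {"search", "ingest", "system_stats"}
token = {"search", "ingest"}
print(sorted(effective_capabilities(token, user)))

# The owner later loses the right to ingest, so the token does too.
user.discard("ingest")
print(sorted(effective_capabilities(token, user)))
```

Because the intersection is evaluated against the owner's current capabilities, nothing needs to be revoked by hand when an account loses access.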

Creating and Managing Tokens

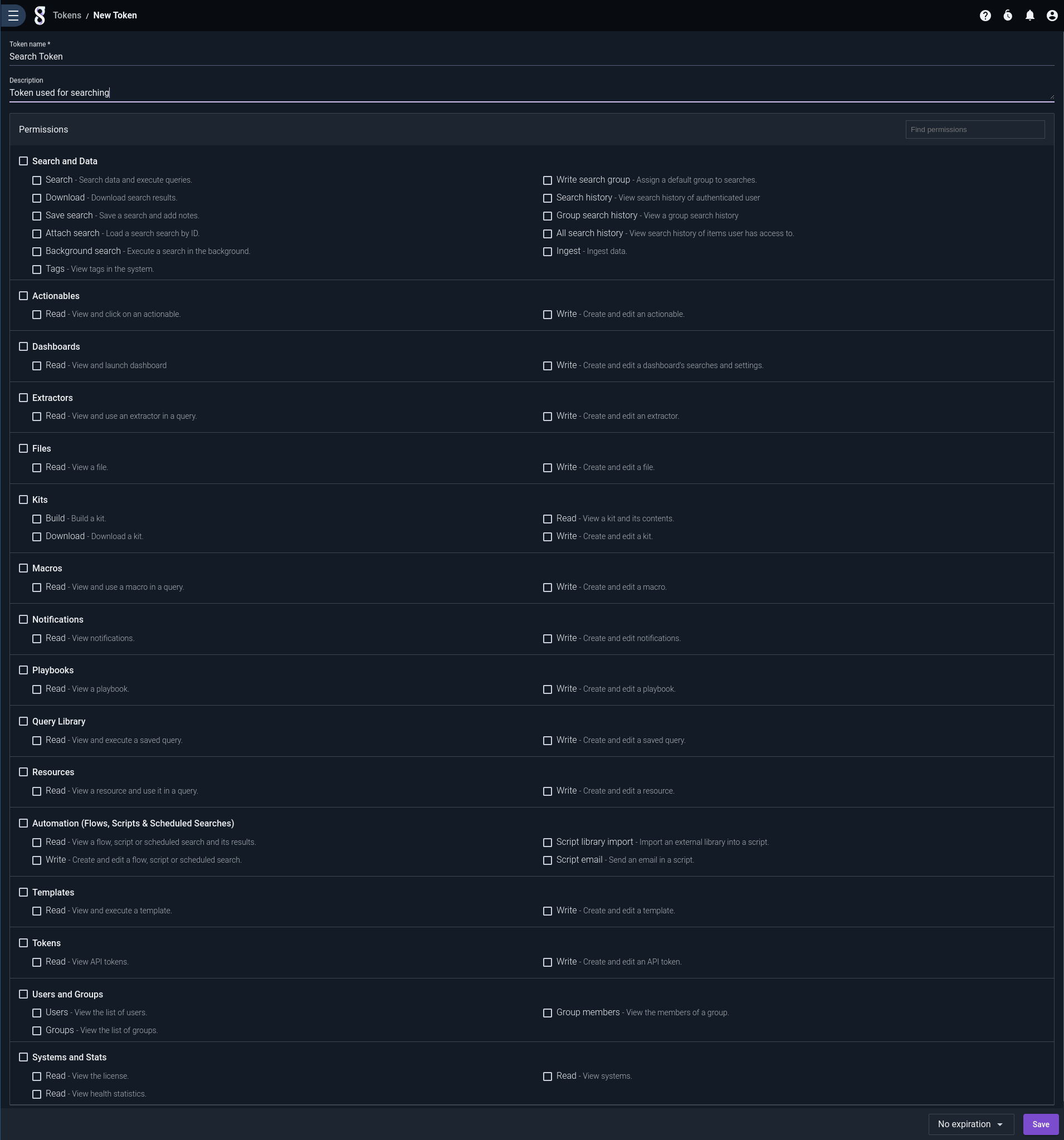

API tokens are created via the "Tools & Resources" page. This page can manage existing tokens, create new tokens, and delete/revoke existing tokens. The token creation page requires a few bits of information, specifically a name, description, and the set of API features that it should have access to.

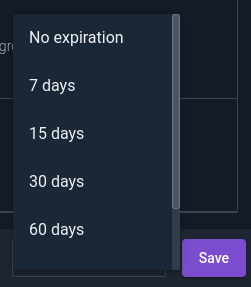

You can also specify an optional expiration date for tokens. I personally love being able to hand off a time-limited access token to something knowing that it will lose access in a week, even if I forget about it.

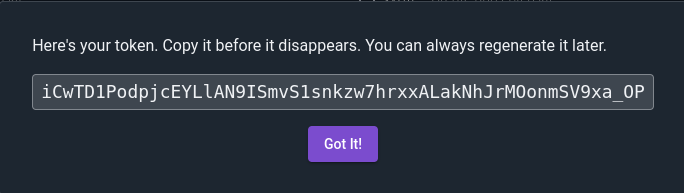

Once a token has been created, you can never again pull back the actual token value. You can modify the expiration, name, description, and even API accesses, but the token value itself is gone for good. If you forget the token value it will have to be regenerated, meaning that any external tool or script using the old token will lose access. Long story short, you only get one shot at capturing the actual token value, so save it somewhere when it is displayed.

To use a token, put it in a header named Gravwell-Token on the HTTP request. A simple HTTP GET request with that header set, made with curl for example, is all it takes to pull back the current status of indexers.
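For readers who would rather script this than use curl, here is a minimal Python sketch of the same idea using only the standard library. The Gravwell-Token header name comes from the text above; the host, the status path, and the GRAVWELL_TOKEN environment variable are all assumptions made for illustration, so check the API documentation for the real endpoint.

```python
import os
import urllib.request

TOKEN_HEADER = "Gravwell-Token"  # header name given in the text above
BASE_URL = "https://gravwell.example.com"  # hypothetical host
STATUS_PATH = "/api/stats/ping"  # ASSUMPTION: placeholder path, check the docs


def token_request(url, token, method="GET", extra_headers=None):
    """Build (but do not send) a request authenticated with an API token."""
    headers = {TOKEN_HEADER: token}
    headers.update(extra_headers or {})
    return urllib.request.Request(url, headers=headers, method=method)


def fetch(req, timeout=10):
    """Send the request and return the raw response body as bytes."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.read()


# Read the secret from the environment rather than hard-coding it.
# GRAVWELL_TOKEN is a hypothetical variable name.
token = os.environ.get("GRAVWELL_TOKEN", "replace-me")
req = token_request(BASE_URL + STATUS_PATH, token)
print(req.get_method(), req.full_url)
# To actually send it against a real instance: print(fetch(req).decode())
```

Keeping the request builder separate from the code that sends it makes it easy to inspect exactly what will go over the wire before pointing it at a live system.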

Executing Queries

Gravwell 5.0.0 introduced two new APIs that make it much easier for external tools to interface with the query system. We still have a great open source client library that can drive every single piece of Gravwell, but sometimes you just want to hit a REST API and roll with some JSON.

The two new API endpoints released for 5.0.0 are /api/parse and /api/search/direct, which allow for a one-shot REST request that can parse and execute searches, respectively. The parse API lets you test a query string for valid syntax.

The parse API response will return either a block of JSON describing the parsed query, including EVS, hints, and render modules, or an error.
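A rough Python sketch of a syntax check against /api/parse might look like the following. Several details here are assumptions rather than documented behavior: that the query travels in a header named query, that GET is an accepted method, and that an error shows up as a non-200 status or a body that is not JSON. The helper names are ours, not part of any Gravwell library.

```python
import json
import urllib.error
import urllib.request


def build_parse_request(base_url, token, query):
    """Build a request asking /api/parse to validate a query string."""
    headers = {
        "Gravwell-Token": token,
        "query": query,  # ASSUMPTION: the query is passed in a "query" header
    }
    url = base_url.rstrip("/") + "/api/parse"
    return urllib.request.Request(url, headers=headers, method="GET")


def interpret_parse_response(status, body):
    """Return (ok, payload): parsed JSON on success, raw text otherwise."""
    text = body.decode("utf-8", errors="replace")
    if status != 200:  # ASSUMPTION: errors use a non-200 status
        return False, text
    try:
        return True, json.loads(text)
    except json.JSONDecodeError:
        return False, text


def check_query(base_url, token, query, timeout=10):
    """Send the parse request; not called here because it needs a live server."""
    req = build_parse_request(base_url, token, query)
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return interpret_parse_response(resp.status, resp.read())
    except urllib.error.HTTPError as err:
        # urllib raises on 4xx/5xx; the error body still carries the message.
        return interpret_parse_response(err.code, err.read())


req = build_parse_request("https://gravwell.example.com", "replace-me",
                          "tag=gravwell limit 10")
print(req.get_method(), req.full_url)
```

A check like this is handy in a CI job or a pre-commit hook, where a saved query can be validated before anything tries to run it.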

The search endpoint makes executing queries efficient and easy. However, be aware that the endpoint is synchronous, meaning the request will block until the query has finished and delivered all its data. If you are querying over a large time period, the API request may take a long time to finish and could potentially return gigabytes of data, so be sure to tune client timeouts and outputs accordingly.

The direct search API is designed to be an atomic thing: you execute a query and get the results back in one go. So while this allows for some really simple integrations, it also means you need to fully specify your query, time period, and how you want the data back in the REST request. The full direct query API documentation is available on our docs page, so we won't dive into every detail of the API, but let's start with a few simple examples.

Our first example just pulls the 10 most recent entries from the gravwell tag and returns the results in plain text, using HTTP headers to specify the duration and format.
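As a sketch of what such a request could look like from Python, the snippet below sets the query, duration, and format as headers on a request to /api/search/direct. The header names, the POST method, the query string, and the duration and format values are assumptions for illustration; the host is hypothetical.

```python
import urllib.request


def direct_search_request(base_url, token, query, duration, fmt):
    """Build a one-shot request for /api/search/direct."""
    headers = {
        "Gravwell-Token": token,
        # ASSUMPTION: these three header names mirror the text above
        # (query, duration, format); confirm them against the API docs.
        "query": query,
        "duration": duration,
        "format": fmt,
    }
    url = base_url.rstrip("/") + "/api/search/direct"
    return urllib.request.Request(url, headers=headers, method="POST")


def run(req, timeout=300):
    """Send the search and return the body as text (blocks until done)."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")


req = direct_search_request(
    "https://gravwell.example.com",  # hypothetical host
    "replace-me",
    "tag=gravwell limit 10",         # assumed query for "10 most recent entries"
    "1h",
    "text",
)
# Show everything except the secret token.
for name, value in sorted(req.header_items()):
    if name.lower() != "gravwell-token":
        print(f"{name}: {value}")
```

Since the endpoint is synchronous, the generous default timeout in `run` is deliberate; tune it to the time range you are searching.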

Plain text is fine and all, but let's try something more useful--maybe pull back a pre-filtered PCAP? A query can grab the latest 1000 packets that use TCP port 80 and return a fully formed PCAP-NG file which you can turn around and load into Wireshark (with curl, add the extra --output /tmp/http.pcap option to redirect output to a file).
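In a script, the equivalent of redirecting output to a file is streaming the response body to disk in chunks, so a large capture never has to fit in memory. The sketch below shows one way to do that in Python. The header names, the pcap format value, and the POST method are assumptions, and the query is left as a parameter because the exact query string is not given here.

```python
import io
import os
import shutil
import tempfile
import urllib.request


def stream_to_file(src, dest_path, chunk_size=64 * 1024):
    """Copy a file-like object to disk in chunks; return bytes written."""
    with open(dest_path, "wb") as dest:
        shutil.copyfileobj(src, dest, chunk_size)
    return os.path.getsize(dest_path)


def download_pcap(base_url, token, query, duration, dest_path, timeout=600):
    """Run a direct search and save the result; needs a live server."""
    headers = {
        "Gravwell-Token": token,
        "query": query,        # ASSUMPTION: header names and the "pcap"
        "duration": duration,  # format value are illustrative, check the docs
        "format": "pcap",
    }
    url = base_url.rstrip("/") + "/api/search/direct"
    req = urllib.request.Request(url, headers=headers, method="POST")
    # The timeout applies to each blocking socket operation,
    # not to the transfer as a whole.
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return stream_to_file(resp, dest_path)


# Offline demonstration of the streaming helper with stand-in bytes.
demo_path = os.path.join(tempfile.mkdtemp(), "demo.pcap")
written = stream_to_file(io.BytesIO(b"\x00" * 200_000), demo_path)
print(written, "bytes written to", demo_path)
```

With a real instance, `download_pcap` would be called with your own query and a destination such as /tmp/http.pcap, and the resulting file opened in Wireshark.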

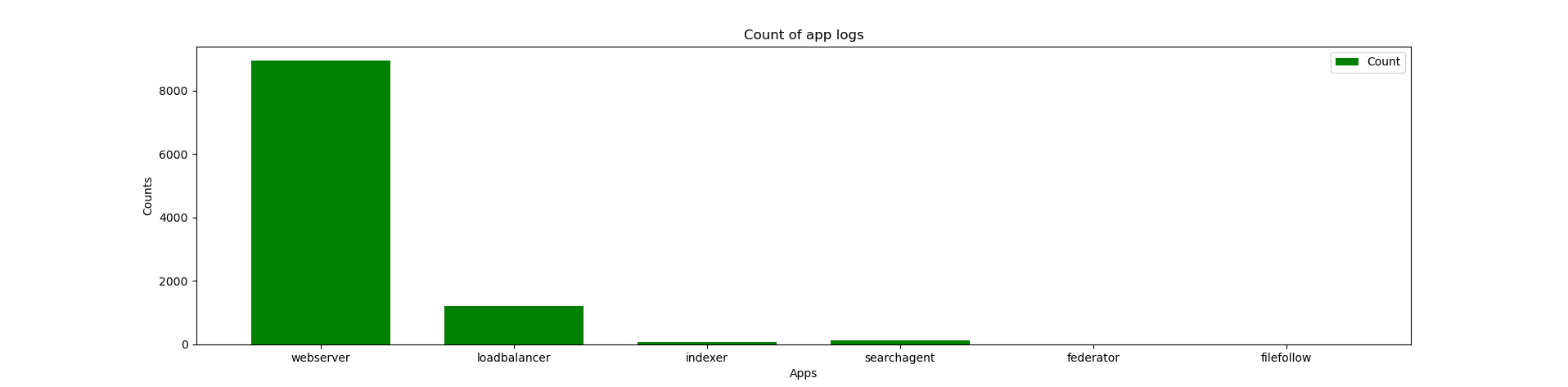

Now, for something completely different: a little snippet of Python can run a query and use the Python Matplotlib library to create a bar chart.
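Here is a minimal sketch of the charting half of that idea. It assumes the query results have already been fetched as CSV text with one label column and one count column; the column names and sample rows below are made up for illustration, and the real response layout depends on your query and the format you request. Matplotlib is imported inside the plotting function so the parsing code still runs where it is not installed.

```python
import csv
import io


def parse_counts(csv_text, label_col, count_col):
    """Turn CSV text into parallel lists of labels and integer counts."""
    reader = csv.DictReader(io.StringIO(csv_text))
    labels, counts = [], []
    for row in reader:
        labels.append(row[label_col])
        counts.append(int(row[count_col]))
    return labels, counts


def plot_bar(labels, counts, out_path, title="Query results"):
    """Render a bar chart to a file (requires the matplotlib package)."""
    import matplotlib
    matplotlib.use("Agg")  # render off-screen, no display needed
    import matplotlib.pyplot as plt

    fig, ax = plt.subplots()
    ax.bar(labels, counts)
    ax.set_title(title)
    ax.set_ylabel("count")
    fig.savefig(out_path)
    plt.close(fig)


# Hypothetical sample data standing in for a real query response.
sample = "app,count\nwebserver,42\nindexer,17\ningester,8\n"
labels, counts = parse_counts(sample, "app", "count")
print(labels, counts)
# With matplotlib installed: plot_bar(labels, counts, "/tmp/counts.png")
```

The same two lists can be handed to any other plotting or reporting tool, which is the point of getting results back as plain structured text.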

The REST-based API makes integration easy, and API tokens let you fine-tune access. So whether you need to automatically upgrade Gravwell resources using some curl-fu, or you just want to draw some highly specialized graphs in PowerBI, the new API tokens and REST query APIs can make it easier.

Conclusion

At Gravwell we are always striving to build a product that gives you access to your data more efficiently and without the huge overhead of data normalization. Our integrated query system lets mere mortals twist and shape raw machine data into beautiful graphs and complex automations entirely within Gravwell--but sometimes external tools do something we can't, or you just need to break out a little Python. That's where tokens and the REST APIs come in.

Join our Discord through the widget below to share what you built, or drop us a message if you run into any issues.